Blogs

Data Verification using AI Tools

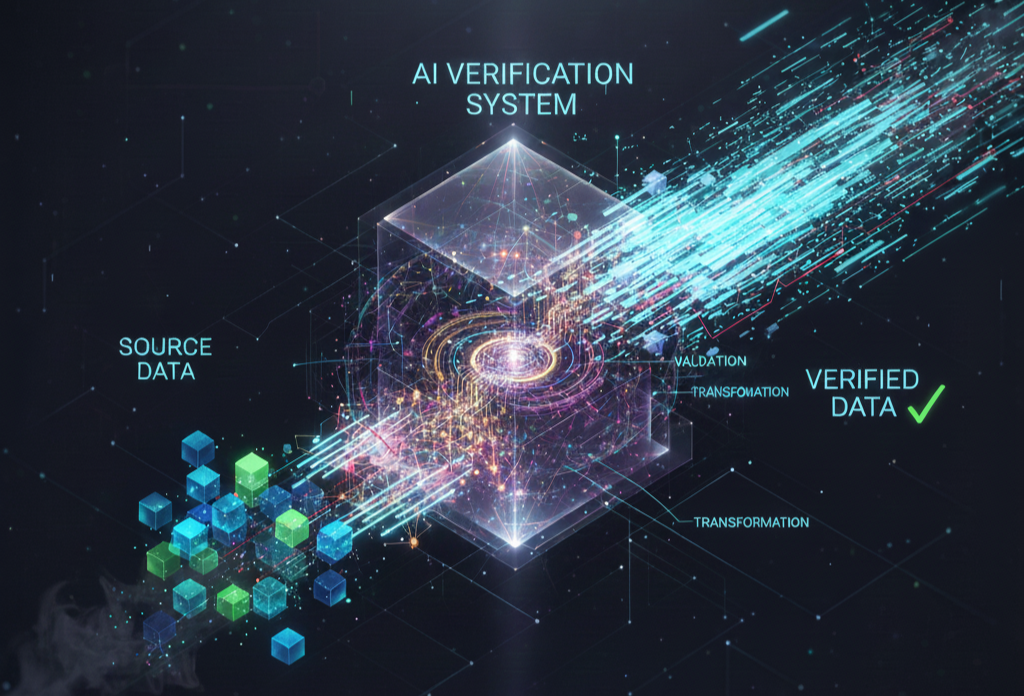

In our Data Extraction project, the requirement soon came in where we had to validate certain information that we had extracted. Previously, this validation process was done by a team of researchers. We were now tasked with automating the process...

Read More

AI Driven Data Extraction

Data extraction has been an integral part of various industries, especially the marketing industry, for years now. Data scraping remains an efficient way of Data Extraction, where automated processes such as scripts, bots, or certain software are developed...

Read More

Interesting LLM News - August 2023

Interesting Paper came out this month about using LLMs as a DBA. D-Bot, an LLM-based database administrator that can continuously acquire database maintenance experience from textual sources, and provide reasonable, well-founded, in-time...

Read More

Interesting LLM News - July 2023

Meta releases Llama-2. It is open source, with a license that authorizes commercial use!This is going to change the landscape of the LLM market. Llama-v2 is available on Microsoft Azure, AWS, Hugging Face and other providers.It could also run on-prem....

Read More

Interesting LLM News - May 2023

Leaked memo from Google makes case that Open Source LLM’s will outpace the achievements and innovations of closed ones including from Google and OpenAI. New Open-Source variants are close to ChatGPT in preference when real humans...

Read More

How crucial is RPA going to be in the coming years?

Robotic process automation (RPA) is the use of software with artificial intelligence (AI) and machine learning capabilities to handle high-volume, repeatable tasks that previously required humans to perform. These tasks can include queries, calculat...

Read More

When Should You Choose Automation?

Automation is always assumed to be the most efficient option when it comes to tech. If you can automate a process, you can make it quicker. After all, it’s the uncomfortable truth that AI and robots can execute a lot of tasks a whole lot quicker than humans can. Even in the case of testing, automation can be a fantastic...

Read More

Machine-learning-based bio-computing (ML), Deep Learning (DL)

Machine learning (ML), especially deep learning (DL), is playing an increasingly important role in the pharmaceutical industry and bio-informatics. For instance, the DL-based methodology is found to predict the drug target interaction and molecule...

Read More

Unlearning and Relearning with Digital Transformation

Digital Transformation across various industries accelerated in 2020 thanks to COVID-19. Not all industries adapted well to this as this transformation was more forced than a voluntary one. Although this digital transformation was quick and staggering...

Read More

Streamlining your business Operations with NoOps

Traditional IT operations (ITOps) is the process of designing, developing, deploying and maintaining the infrastructure and software components of a specific product or application. DevOps is a combination of practices and tools designed to increase an...

Read More

Everything-as-a-Service (XaaS) Solutions Continue to Transform IT Enterprises

Organizations aim for more control over the enterprise IT they use and how they pay for it — and that demand has been met by a shift to service-based delivery models for providing products, capabilities...

Detailed Blogs

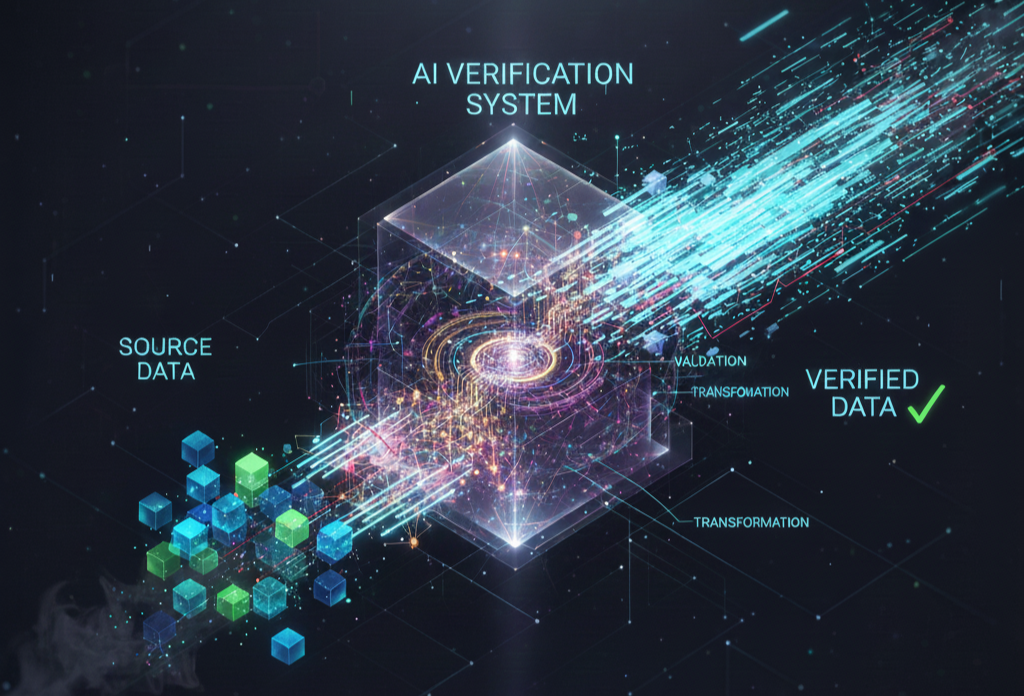

Data Verification using AI Tools

Posted on November 11, 2025

In our Data Extraction project, we soon received a new requirement to validate the extracted information. Previously, this validation was done by a team of researchers. We were now tasked with automating the process these researchers performed, so we started exploring ways to do it. This is where we came across Google Gemini’s Grounding capabilities. Google Gemini is Google’s versatile family of multimodal AI models, capable of text generation, image processing, and, in our case, web-based research using their Grounding capabilities.

Google Gemini’s Grounding Capabilities allowed us to verify and validate AI responses using external sources such as Google Search and the web. This approach is a significant step towards enhancing the accuracy of LLM-generated outputs and reducing the risk of hallucinations.

We implemented this capability by following the simple code snippets provided in the Gemini API’s Grounding documentation (https://ai.google.dev/gemini-api/docs/google-search). This let us automate research and verification by passing a prompt in the Gemini API request. The prompt should be a detailed set of instructions for research and verification.
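To give a feel for such a request, here is a minimal sketch assuming the `google-genai` Python SDK and an API key set in the environment. It follows the pattern in the grounding documentation: enable the `google_search` tool, send the research instructions as the prompt, and read the source URLs from the response's grounding metadata. The model name, the prompt wording, and the helper names `build_verification_prompt` and `verify_with_grounding` are illustrative assumptions, not our project code.

```python
def build_verification_prompt(entity: str, field: str, value: str) -> str:
    """Turn one extracted record into detailed research instructions (hypothetical wording)."""
    return (
        f"Research the organization '{entity}' using web search.\n"
        f"Check whether its {field} is '{value}'.\n"
        "Answer VALID or INVALID, give the correct value if it differs, "
        "and list the URLs you relied on."
    )


def verify_with_grounding(prompt: str, model: str = "gemini-2.5-flash"):
    """Send the prompt with the Google Search grounding tool enabled.

    The SDK is imported lazily so the prompt helper above works without it.
    """
    from google import genai
    from google.genai import types

    client = genai.Client()  # reads the API key from the environment
    config = types.GenerateContentConfig(
        tools=[types.Tool(google_search=types.GoogleSearch())]
    )
    response = client.models.generate_content(
        model=model, contents=prompt, config=config
    )

    # Grounding metadata lists the web pages the answer was based on.
    sources = []
    candidate = response.candidates[0] if response.candidates else None
    metadata = candidate.grounding_metadata if candidate else None
    if metadata and metadata.grounding_chunks:
        sources = [chunk.web.uri for chunk in metadata.grounding_chunks if chunk.web]
    return response.text, sources
```

Calling `verify_with_grounding(build_verification_prompt("Example Corp", "headquarters city", "Austin"))` would return the model's answer together with the grounding URLs, which can be stored alongside the verified record.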

Things to consider before implementing such an automated system, based on our research:

- Always provide the prompt using LangChain's ChatPromptTemplate and retrieve the output using LangChain's PydanticOutputParser to obtain a consistent, structured response from the LLM.

- Optimize the prompt to reduce the number of tokens while keeping the instructions clear.

- Always wrap every API request in your scripts in try-catch statements to catch API calls that fail and outputs that are not returned in the expected format.

- Create a well-defined Pydantic base model (BaseModel) with all the fields you'd like in the output. Validation sources can be included here too, so that the URLs of the sources used to validate the information are also returned in the output.

All LangChain syntax for structured prompt input and structured output can be found at: https://docs.langchain.com/

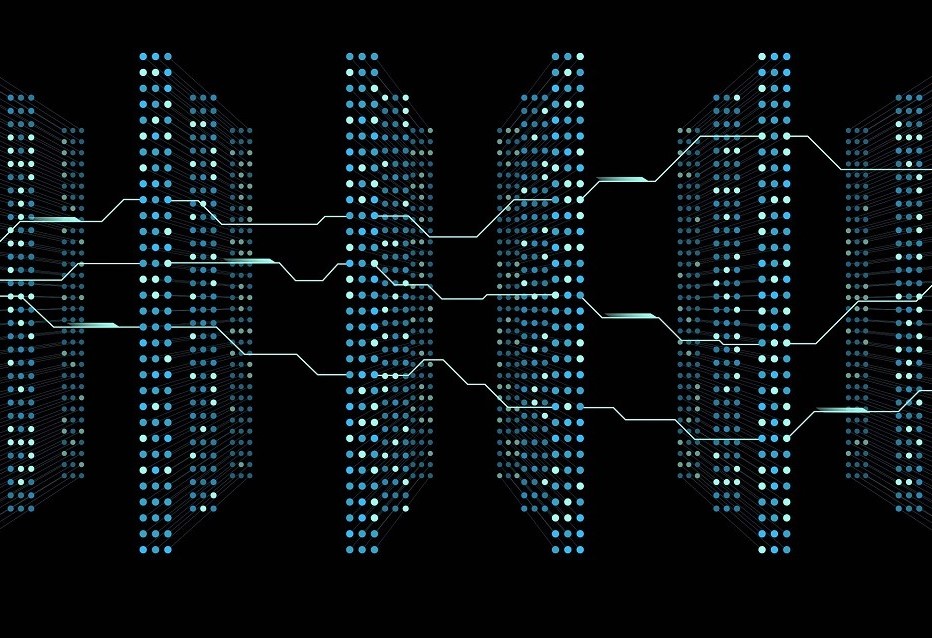

AI Driven Data Extraction

Posted on November 06, 2025

Data extraction has been an integral part of various industries, especially the marketing industry, for years now. Data scraping remains an efficient means of data extraction, in which automated processes such as scripts, bots, or specialized software are developed to collect unstructured information and convert it into structured formats. It is crucial, however, that this process stays within the legal and ethical boundaries of intellectual property and privacy.

Earlier, it would’ve taken days to write scripts or create bots to automate data extraction by scraping. That has changed: with the recent rise of AI and the rapid development of LLMs, we can now build these automations within minutes or a couple of hours. We recently worked on a project where data scraping had to be implemented to meet a client’s requirements, and it was amazing to see how much time we saved by writing code with AI rather than in our traditional ways. While writing code with AI has its drawbacks, we found that a thorough human code review and testing of all the logic helped bridge the gap between an AI-developed script and a human-developed one.

Given below are some key aspects to be considered while creating data scraping automations using AI:

- Before developing scraping automations, verify whether the source allows data scraping and whether our process would comply with its terms of service and copyright regulations.

- Inspect the web pages that need scraping, identify the HTML and CSS selectors for the required data ourselves, and pass those on for code development, rather than simply giving the AI a URL and a description of what we’re looking for.

- Always perform thorough code reviews manually, without any AI assistance, to make sure the logic is simple and efficient.

- Develop scripts in small, modular units, one per functionality, as this makes testing and logic revision easier and more efficient.

- Before deployment, perform strict regression testing across site versions and edge cases to ensure reliability.
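The selector-first, modular approach could look like the sketch below, assuming `beautifulsoup4` is installed. The selectors and field names are hypothetical placeholders for what manual inspection of a real page would produce. Checking `robots.txt` with the standard-library `urllib.robotparser` covers only one part of the permission check; terms of service and copyright still need a human review. Each function does one job, which keeps unit and regression tests small.

```python
from typing import Dict, List, Optional
from urllib.robotparser import RobotFileParser

from bs4 import BeautifulSoup

# Hypothetical selectors, found by manually inspecting the target page in the browser.
SELECTORS: Dict[str, str] = {
    "name": "h1.company-name",
    "phone": "span.contact-phone",
}


def is_allowed(robots_lines: List[str], url: str, user_agent: str = "*") -> bool:
    """Check robots.txt rules for a URL (terms of service still need a manual review)."""
    parser = RobotFileParser()
    parser.parse(robots_lines)
    return parser.can_fetch(user_agent, url)


def extract_field(soup: BeautifulSoup, selector: str) -> Optional[str]:
    """Return the stripped text of the first element matching a CSS selector, if any."""
    node = soup.select_one(selector)
    return node.get_text(strip=True) if node else None


def extract_record(html: str, selectors: Dict[str, str] = SELECTORS) -> Dict[str, Optional[str]]:
    """Apply every selector to one page and return a structured record."""
    soup = BeautifulSoup(html, "html.parser")
    return {field: extract_field(soup, sel) for field, sel in selectors.items()}
```

Because the selectors live in one dictionary and each function has a single job, a site redesign usually means updating `SELECTORS` and re-running the regression tests rather than rewriting the script.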

In data scraping, there are cases, such as one we faced, where automated browser-based interaction is needed to extract specific data. The program must open a browser and interact with page elements to make the data available for extraction. This is time-consuming and often makes the host computer hard to use for a while, since any interaction with the computer or browser might break the extraction process.

To increase throughput and reduce resource contention in this process, we employed a distributed computing architecture spread across a fleet of networked servers. The scraping workload was divided among the nodes, and each node ran in parallel, opening its own browser instances to automate navigation and extraction, so the host machine's interactive session was not fully consumed. This resource sharing significantly reduced end-to-end processing time and kept the machines usable for concurrent human tasks.
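To illustrate how such a workload can be divided, here is a single-machine sketch using Python's `concurrent.futures`, where each worker thread stands in for one server node. `scrape_batch` is a hypothetical placeholder; in a real fleet each node would run on its own machine (for example, pulling batches from a shared job queue) and open its own browser instance.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List, Tuple


def partition(items: List[str], n_nodes: int) -> List[List[str]]:
    """Round-robin split so every node gets a roughly equal share of the work."""
    return [items[i::n_nodes] for i in range(n_nodes)]


def scrape_batch(node_id: int, urls: List[str]) -> List[Tuple[int, str]]:
    """Hypothetical placeholder: a real node would drive its own browser here."""
    return [(node_id, url) for url in urls]


def run_in_parallel(urls: List[str], n_nodes: int = 3) -> List[Tuple[int, str]]:
    """Dispatch one batch per node concurrently, then collect each node's results."""
    batches = partition(urls, n_nodes)
    results: List[Tuple[int, str]] = []
    with ThreadPoolExecutor(max_workers=n_nodes) as pool:
        futures = [pool.submit(scrape_batch, i, batch) for i, batch in enumerate(batches)]
        for future in futures:
            results.extend(future.result())
    return results
```

Round-robin splitting keeps batch sizes within one item of each other, so no single node becomes the bottleneck.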

Beyond data scraping, there are cases where the scraped data needs to be verified. Before the arrival of LLM-based automated online research, this was done manually. With the latest LLM-based research capabilities available in various models, however, we became curious about how we could automate this process for the data we extract. More on that in our next post; stay tuned.

Interesting LLM News - August 2023

Story 1: LLM As DBA?

An interesting paper came out this month about using LLMs as a DBA. It presents D-Bot, an LLM-based database administrator that can continuously acquire database maintenance experience from textual sources and provide reasonable, well-founded, timely diagnosis and optimization advice for target databases.

Link: https://arxiv.org/abs/2308.05481

Story 2: Code Llama released!

Code Llama is an open-source LLM built on Llama-2, previously released by Meta, and specifically tuned for writing code.

- Llama-2 tuned for code generation and debugging

- Three variants: base models, Python-specific, and instruction-tuned

- 7B, 13B and 34B parameter models

- Available with the same license as Llama-2

- Blog: https://ai.meta.com/blog/code-llama-large-language-model-coding/

- Paper: https://ai.meta.com/research/publications/code-llama-open-foundation-models-for-code/

- Code: https://github.com/facebookresearch/codellama

- Models: https://ai.meta.com/resources/models-and-libraries/llama-downloads/

Link: https://www.linkedin.com/posts/yann-lecun_large-language-model-activity-7100510408960077825-awib/?utm_source=share&utm_medium=member_desktop

Story 3: OpenAI to allow custom fine-tuning

OpenAI to allow custom fine-tuning of its GPT-3.5 Turbo LLM.

Developers can now bring their own data to customize GPT-3.5 Turbo for their use cases.

Link: https://openai.com/blog/gpt-3-5-turbo-fine-tuning-and-api-updates
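For a sense of what bringing your own data looks like: GPT-3.5 Turbo fine-tuning takes training examples as a JSONL file, one JSON object per line, each holding a `messages` list of chat turns with `system`, `user`, and `assistant` roles. The sketch below only prepares such lines with Python's standard library; the example Q&A, the system prompt, and the helper `to_finetune_jsonl` are hypothetical, and uploading the file and creating the fine-tuning job happen separately through OpenAI's API.

```python
import json
from typing import Iterable, Tuple


def to_finetune_jsonl(pairs: Iterable[Tuple[str, str]], system_prompt: str) -> str:
    """Convert (user question, ideal answer) pairs into chat-format JSONL lines."""
    lines = []
    for question, answer in pairs:
        example = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": question},
                {"role": "assistant", "content": answer},
            ]
        }
        lines.append(json.dumps(example))  # one training example per line
    return "\n".join(lines) + "\n"


jsonl = to_finetune_jsonl(
    [("What does RPA stand for?", "Robotic process automation.")],
    system_prompt="You answer questions about automation.",
)
```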

Interesting LLM News - July 2023

Story 1: Meta releases Llama-2

Meta releases Llama-2 and it's open source, with a license that authorizes commercial use!

Post from Yann LeCun, VP & Chief AI Scientist at Meta: https://lnkd.in/e-dmJvUq

Here are some interesting highlights: This is going to change the landscape of the LLM market. Llama-2 is available on Microsoft Azure, AWS, Hugging Face and other providers. It could also run on-prem as needed. Pretrained and fine-tuned models are available with 7B, 13B and 70B parameters.

Llama-2 website: https://ai.meta.com/llama/

Llama-2 paper: https://ai.meta.com/research/publications/llama-2-open-foundation-and-fine-tuned-chat-models/

Story 2: ChatGPT Code Interpreter released

Many different posts, but here is a good post about data analytics: https://lnkd.in/e4Tw48A3

If you have not played with this yet, you should definitely try it. It is an engineering marvel and can do amazing things with data analytics, among other things.

Here are some interesting highlights: You can paste unformatted data from a PDF and ask for an analysis; it figures out the table layout, restructures formats, runs models, and "reasons" about the results. It covers many data science use cases, such as visualization, data exploration, and pre-processing. It can turn an unpolished dataset into a fully functioning HTML heat map, or turn a CSV file into a GIF animation. This could be a competitor to BI tools.

Story 3: GPT-4 Details Leaked

Some quite interesting details on the architecture of GPT-4 have leaked.

Many different sources, but here is a good write up: GPT-4's Leaked Details Shed Light on its Massive Scale and Impressive Architecture | Metaverse Post - https://mpost.io/gpt-4s-leaked-details-shed-light-on-its-massive-scale-and-impressive-architecture/

Here are some interesting highlights: GPT-4 is estimated to have a staggering total of approximately 1.8 trillion parameters distributed across 120 layers. OpenAI reportedly implemented a mixture of experts (MoE) model in GPT-4, using 16 experts within the model, so that different parts of the input are handled by different expert sub-networks rather than by one dense network. GPT-4 was reportedly trained on a colossal dataset of approximately 13 trillion tokens, and OpenAI used parallelism to make full use of its A100 GPUs.
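For readers new to mixture of experts: inside an MoE layer, a small gating network scores every expert for each token, and only the top-scoring few are run and their outputs blended. The toy sketch below shows that routing step in plain Python with 16 experts. The choice of k = 2, the random gate scores, and the scalar "experts" are illustrative assumptions, not details of GPT-4.

```python
import math
import random
from typing import Callable, List


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    x: float,
    gate_scores: List[float],
    experts: List[Callable[[float], float]],
    k: int = 2,
) -> float:
    """Route input x to the k highest-scoring experts and mix their outputs."""
    top = sorted(range(len(experts)), key=lambda i: gate_scores[i], reverse=True)[:k]
    weights = softmax([gate_scores[i] for i in top])  # renormalize over the chosen experts only
    return sum(w * experts[i](x) for w, i in zip(weights, top))


# 16 toy "experts"; expert i simply scales its input by (i + 1).
experts = [lambda x, s=i + 1: s * x for i in range(16)]
rng = random.Random(0)
gate_scores = [rng.gauss(0, 1) for _ in range(16)]
y = moe_forward(1.0, gate_scores, experts, k=2)
```

Only 2 of the 16 experts do any work for a given input, which is how MoE models can hold many parameters while keeping per-token compute much lower.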

Interesting LLM News - May 2023

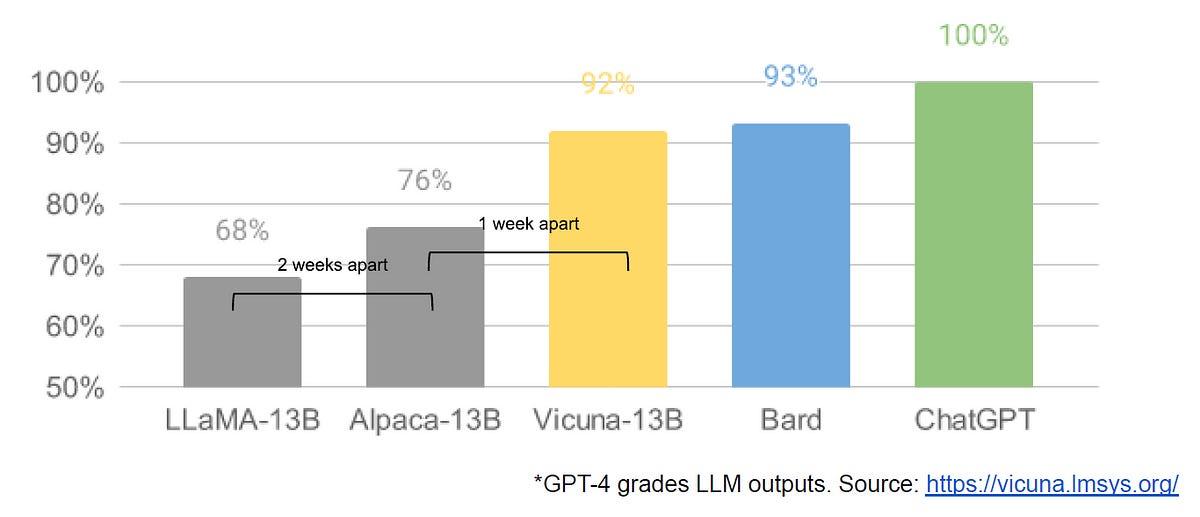

Story 1: Leaked memo from Google

A leaked memo from Google makes the case that open-source LLMs will outpace the achievements and innovations of closed ones, including those from Google and OpenAI.

- New open-source variants come close to ChatGPT in preference when real humans are surveyed.

- Drawing on the ingenuity of the whole world, rather than a few engineers, allows much faster iteration.

- New fine-tuning methods such as Low-Rank Adaptation (LoRA) make improvements stackable, so models can be built up incrementally instead of training large language models from scratch. This lets the work be done on commodity consumer hardware for a fraction of the cost (hundreds of dollars versus millions of dollars).

Reference: https://www.semianalysis.com/p/google-we-have-no-moat-and-neither
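Part of why LoRA is so cheap: instead of updating a full d × k weight matrix W, it freezes W and learns a low-rank update W + BA, where B is d × r and A is r × k with a small rank r. The quick calculation below compares trainable parameters for one square 4096 × 4096 matrix at rank 8; these sizes are illustrative assumptions, not numbers from the memo.

```python
def full_params(d: int, k: int) -> int:
    """Trainable parameters when fine-tuning the whole d x k matrix."""
    return d * k


def lora_params(d: int, k: int, r: int) -> int:
    """Trainable parameters in the LoRA factors B (d x r) and A (r x k)."""
    return d * r + r * k


d = k = 4096
r = 8
full = full_params(d, k)
lora = lora_params(d, k, r)
print(full, lora, f"{lora / full:.2%}")  # 16777216 65536 0.39%
```

Because each fine-tune is just a small pair of matrices on top of a frozen base model, many such updates can be trained, shared, and applied cheaply.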

Story 2: Interesting Article regarding RLHF

An interesting article on RLHF: Reinforcement Learning from Human Feedback for large language models.

The LLM training process and where RLHF fits in:

Phase 1: Pretraining for text completion on a large dataset of low-quality data (> 1 trillion tokens)

Phase 2: Supervised fine-tuning (SFT) for dialogue on a smaller dataset of high-quality demonstration data (10k–100k (prompt, response) pairs)

Phase 3: RLHF, i.e., reinforcement learning using a reward model (trained on 100k–1M comparisons of prompt, winning response, losing response)

Reference: https://huyenchip.com/2023/05/02/rlhf.html
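In Phase 3, the reward model is commonly trained on those (prompt, winning response, losing response) comparisons with a pairwise loss, -log σ(r_w - r_l), where r_w and r_l are the scores it gives the winning and losing responses. The loss is small when the winner scores well above the loser. The sketch below computes that loss for given scores; the scores are made-up numbers and the function name is an assumption.

```python
import math


def pairwise_reward_loss(r_win: float, r_lose: float) -> float:
    """Compute -log(sigmoid(r_win - r_lose)) in a numerically stable way."""
    margin = r_win - r_lose
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    # For negative margins, rewrite to avoid overflow in exp(-margin).
    return -margin + math.log1p(math.exp(margin))


for r_win, r_lose in [(3.0, 0.0), (1.0, 1.0), (0.0, 3.0)]:
    print(r_win, r_lose, round(pairwise_reward_loss(r_win, r_lose), 4))
```

The loss is about 0.05 when the preferred response scores 3 points higher, log 2 (about 0.69) for a tie, and about 3.05 when the ranking is reversed, so training pushes the reward model to agree with the human comparisons.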

Story 3: Sparks of Artificial General Intelligence

A really interesting paper and some related articles: Sparks of Artificial General Intelligence: Early experiments with GPT-4

References: Sparks of Artificial General Intelligence: Early experiments with GPT-4 https://arxiv.org/abs/2303.12712

Some Glimpse AGI in ChatGPT. Others Call It a Mirage https://www.wired.com/story/chatgpt-agi-intelligence/

https://lnkd.in/eJDqDmiV

To discuss Large Language Models (LLMs), Artificial Intelligence (AI), and Machine Learning (ML) further, reach out to us at: info@dasfederal.com

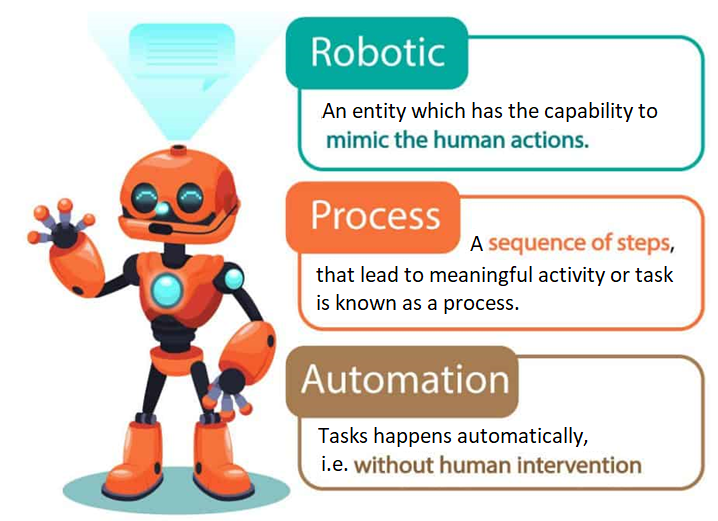

How crucial is RPA going to be in the coming years?

Posted on August 10, 2021

Robotic process automation (RPA) is the use of software with artificial intelligence (AI) and machine learning capabilities to handle high-volume, repeatable tasks that previously required humans to perform. These tasks can include queries, calculations, and maintenance of records and transactions.

Many businesses will be looking towards technology and automation to improve efficiency, reduce costs, and build organizational resilience. One technology that should be at the forefront of those conversations is robotic process automation (RPA).

In a recent report, Gartner is forecasting that RPA revenue will reach close to $2 billion this year, and will continue to rise at double-digit rates beyond 2024. As more and more organizations implement it worldwide, RPA is fast becoming a ubiquitous and necessary tool for the competitive enterprise. This is aided by the rise of low-code and no-code solutions, allowing non-developers and non-IT specialists to play a role in driving digital transformation.

Fabrizio Biscotti, Research Vice President at Gartner, highlights just how great the impact of RPA can be for organizations: “The key driver for RPA projects is their ability to improve process quality, speed and productivity, each of which is increasingly important as organizations try to meet the demands of cost reduction during COVID-19.”

The last few years have seen consistent growth in RPA, and Gartner forecasts this to continue well into the future, with 90% of large organizations adopting RPA by 2022.

Gartner cites resilience and scalability as the main drivers for current and continued investment. But RPA provides a multitude of advantages for organizations. Here are some reasons why RPA is important for process automation:

- Free up employees for more valuable tasks: RPA lets employees move on to more valuable work. Leaving tedious and repetitive work behind, employees can take up the jobs of the future, perhaps upskilling to implement automation and AI to achieve greater outcomes.

- Improve speed, quality, and productivity: RPA bots can be trained to undertake routine, repetitive tasks faster and more accurately than humans ever could.

- Get more value from big data: Many organizations are generating so much data that they can’t process all of it. There are many opportunities to gain insights from this data and drive greater efficiencies. RPA is ideally suited to help parse large datasets, both structured and unstructured, helping organizations make sense of the data they are collecting.

- Become more adaptable to change: Recovering from the disruption caused by COVID-19 requires organizations to become more agile and nimble in dealing with change. Resilience and adaptability are central to overcoming current and future challenges. RPA helps organizations speed up processes while reducing costs, ensuring they are ready to deal with disruption and change.

The report also indicates that interest in RPA has extended beyond IT departments. CFOs and COOs are opting for RPA as they look to low-code and no-code solutions as the answer to the challenges they face.

So, how do you get started with RPA?

This is where DAS Federal, LLC, in partnership with UiPath, the world’s leading RPA software provider, comes in. RPA can be deployed in any type of enterprise. There are numerous use cases, ranging from IT infrastructure to procurement services and any other business process, workflow, or analysis. Use cases span all industries and are especially relevant to Federal Government systems and workflows.

DAS Federal has a successful track record of implementing automation technologies at various government agencies and strives to bring its technical expertise to implementing UiPath’s RPA system and its working practices, with on-time and on-budget delivery.

For more information, contact: info@dasfederal.com

When Should You Choose Automation?

Posted on July 12, 2021

Automation is always assumed to be the most efficient option when it comes to tech. If you can automate a process, you can make it quicker. After all, it’s the uncomfortable truth that AI and robots can execute a lot of tasks a whole lot quicker than humans can.

Even in the case of testing, automation can be a fantastic tool. You can execute hundreds, even thousands, of tests at the click of a button, with no margin for human error. But it is important to know whether automation is necessary in any given situation, which brings us to the following question:

When is the best time to choose automation?

Tech professionals from across the globe were asked this question, and here’s what they said:

- When the cost makes sense: The bottom line is if you can save money and still deliver a quality product, cut costs where you can. That’s where automation comes into its own. But automation tools aren’t cheap, so the project size needs to be big enough to justify the cost. What’s more, the test needs to have the longevity required for the cost to make sense as well.

- When you are using repetitive tests: If you are running the same test again and again without changing it, the likelihood is it would be much more time-efficient to automate. That’s because a manual task being repeated regularly wastes your team’s valuable time, and is likely to lead to more errors due to lack of attention.

- When time will be saved: Every engineering and QA team needs more time. Especially when contending with lightning-quick SDLCs, any time-saving activity can have a huge impact on your team’s productivity.

- When quality is sure to be improved: Automation removes the possibility of human error. For that reason, in some scenarios quality can be dramatically improved by automated testing. You can also run hundreds of tests at once, meaning you will deliver a well-tested product that can be re-tested time and time again.

- When tests are run frequently: Having the capacity to run frequent tests at high volume just isn’t a possibility in many engineering teams. Manual testing can only go so far, especially for smaller teams with no in-house testing team. If you would like to scale up your testing capacity, speak to one of our growth experts today to see what we can do for your team.

- When you need to run multiple tests at once: Running the same manual tests simultaneously is no easy feat. The likelihood of a team having the capacity to run 100 tests at exactly the same time is low. Automation makes this task super-fast and enables teams to test rapidly without feeling the pressure.

Get started on automation with UiPath and DAS Federal, LLC

Automation definitely can improve quality, boost capacity and save your team time. UiPath has a vision to deliver the Fully Automated Enterprise™, one where companies use automation to unlock their greatest potential. UiPath offers an end-to-end platform for automation, combining the leading Robotic Process Automation (RPA) solution with a full suite of capabilities that enable every organization to rapidly scale digital business operations.

DAS Federal, in partnership with UiPath, aims to bring this AI automation tool to your organization, with robots that take care of your employees’ repetitive and time-consuming tasks, freeing them up for more important work and boosting their performance and morale. For more information, contact: info@dasfederal.com

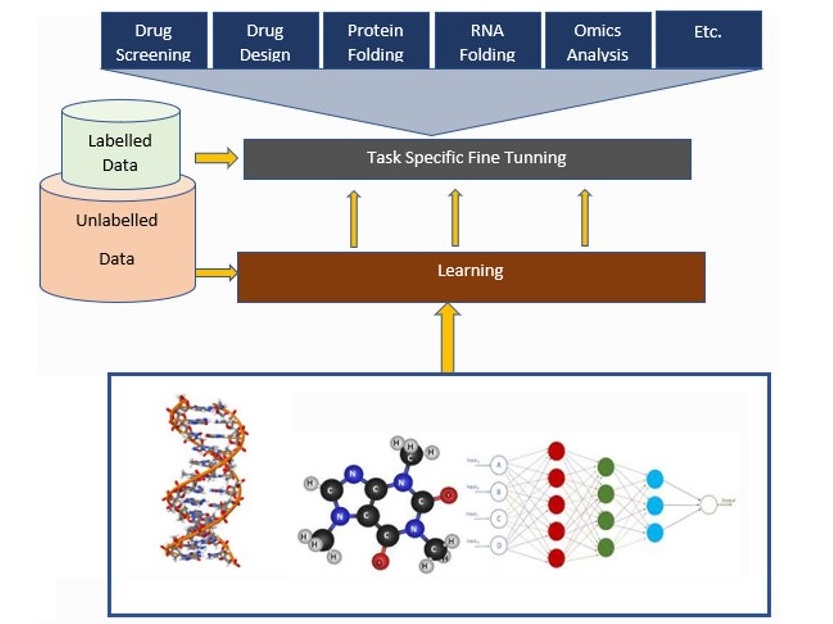

Machine-learning-based bio-computing (ML), Deep Learning (DL)

Posted on May 17, 2021

Machine learning (ML), especially deep learning (DL), is playing an increasingly important role in the pharmaceutical industry and bioinformatics. For instance, DL-based methods have been found to predict drug-target interactions and molecular properties with reasonable precision at quite low computational cost, whereas previously those properties could only be obtained through in vivo/in vitro experiments or computationally expensive simulations (molecular dynamics simulations, etc.).

As another example, in silico RNA folding and protein folding are increasingly being accomplished with the help of deep neural models. The use of ML and DL can greatly improve efficiency and thus reduce the cost of drug discovery, vaccine design, etc.

Despite the power of DL methods, a key challenge in using them in the drug industry is the conflict between their demand for huge amounts of training data and the limited annotated data available. Recently, self-supervised learning has been tremendously successful in natural language processing and computer vision, showing that a large corpus of unlabelled data can be beneficial for learning universal tasks. Molecule representations are in a similar situation: there is a large amount of unlabelled data, including protein sequences (over 100 million) and compounds (over 50 million), but relatively little annotated data. It is therefore quite promising to adopt DL-based pre-training techniques for representation learning of chemical compounds, proteins, RNA, etc.
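To make the self-supervised idea concrete: a common pre-training recipe (masked-token prediction, as popularized by BERT in NLP) hides a fraction of the residues in an unlabelled protein sequence and trains the model to recover them, so the sequence supplies its own labels. The sketch below only prepares one such training example; the 15% mask rate, the `<mask>` token, and the example sequence are assumptions, and this is not PaddleHelix's API.

```python
import random
from typing import List, Optional, Tuple

MASK = "<mask>"


def mask_sequence(
    seq: str, mask_rate: float = 0.15, seed: Optional[int] = None
) -> Tuple[List[str], List[Optional[str]]]:
    """Return (model input, labels); masked positions keep the original residue as the label."""
    rng = random.Random(seed)
    tokens = list(seq)
    labels: List[Optional[str]] = [None] * len(tokens)
    for i, residue in enumerate(tokens):
        if rng.random() < mask_rate:
            labels[i] = residue  # the target the model must predict
            tokens[i] = MASK     # what the model actually sees
    return tokens, labels


inputs, labels = mask_sequence("MKTAYIAKQRQISFVKSHFSRQ", seed=42)
```

No annotation is needed: every one of the millions of unlabelled sequences can be turned into training examples this way, and the learned representations are later fine-tuned on the small annotated datasets.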

PaddleHelix is a machine-learning-based bio-computing framework aimed at facilitating vaccine design, drug discovery, and precision medicine. It is a high-performance framework that features large-scale representation learning and easy-to-use APIs, giving pharmaceutical and biological researchers and engineers convenient access to the most up-to-date, state-of-the-art AI tools. The code is based on Python and C++. The possibilities are endless. Current application areas include:

- Vaccine design

- Drug discovery

- Precision medicine

High Efficiency: PaddleHelix provides LinearRNA, a highly efficient toolkit for RNA structure prediction and analysis. LinearFold and LinearPartition achieve O(n) complexity in RNA-folding prediction, which is hundreds of times faster than traditional folding techniques.

Large-scale Representation Learning: Self-supervised learning for molecule representations offers prospects of a breakthrough in tasks with limited annotation, including drug profiling, drug-target interaction, protein-protein interaction, RNA-RNA interaction, protein folding, RNA folding, and molecule design. PaddleHelix implements various representation learning algorithms and state-of-the-art large-scale pre-trained models to help developers start from “the shoulders of giants” quickly.

Example: PaddleHelix provides frequently used components such as networks, datasets, and pre-trained models. Users can easily use these components to build their own models and systems. PaddleHelix also provides multiple applications, such as compound property prediction and drug-target interaction.

If you'd like to discuss ML & DL further, please reach out to info@dasfederal.com

Desma R. Balachandran

President

DAS Federal, LLC

info@dasfederal.com

Credits to content published by:

MIT Technology Review

International Society for Computational Biology – Bioinformatics

GitHub Paddle Helix Opensource

Unlearning and Relearning with Digital Transformation

Posted on April 23, 2021

Digital transformation across various industries accelerated in 2020 because of COVID-19. Not all industries adapted well, as this transformation was more forced than voluntary. Although this digital transformation was quick and staggering, it gave us a chance to recognize the mistakes that were made and avoid repeating them when redefining digitization in 2021. In 2021, industries must unlearn traditional ways and relearn how to optimize their business processes by integrating them with leading technologies and embracing digital transformation. Factors to focus on in 2021 for proper adoption of digital transformation:

- Upskilling of Employees: Digital transformation can only occur with the support of a skilled workforce. During the pandemic, the demand for digitization came too suddenly for industries to upskill their employees. In fact, unemployment soared higher than ever, as many employees lost their jobs for lack of the skills needed for growing digitization. Learning from this, businesses must now continually upskill their employees and invest in new training strategies to keep up with the rapid rate of digitization.

- Cybersecurity: With the pandemic, most businesses went online, which highlighted the significance of cybersecurity. The shift to remote work ecosystems increased data vulnerability across web interfaces, creating the need for better security measures. Additional security measures, such as AI, digital identity verification, and two-factor authentication, should be incorporated to ensure cybersecurity.

- Automation: Industries should adopt Robotic Process Automation (RPA) to automate repetitive and mundane business tasks rather than handing them over to employees. Using advanced automation technologies, businesses can grow further and become more efficient. Businesses must also invest more in Artificial Intelligence technologies, which were widely adopted across many applications in the tech industry during the pandemic.

If you’re looking to automate, provide cybersecurity, or train staff for your Agency, look no further. DAS Federal (Digital Applied Solutions) provides these high-quality services and will help your Agency take the next step in digital transformation. For more information, contact: info@dasfederal.com

Streamlining your business Operations with NoOps

Posted on March 3, 2021

Traditional IT operations (ITOps) is the process of designing, developing, deploying and maintaining the infrastructure and software components of a specific product or application.

DevOps is a combination of practices and tools designed to increase an organization’s ability to deliver applications and services faster than traditional software development processes. DevOps is the beginning of a bigger, business-critical journey towards a more automated future through AIOps and, ultimately, NoOps.

AIOps introduces data science into the operating model by learning the behaviour of systems and scaling according to the needs of a platform. Here, machine learning is introduced to expand the functionalities of DevOps.
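As a toy illustration of "learning the behaviour of the systems", the sketch below learns a baseline (mean and standard deviation) from historical CPU readings, flags readings far outside it using a simple z-score rule, and suggests scaling out when load is abnormally high. The z-score technique, thresholds, metric, and function names are all hypothetical choices for illustration; production AIOps platforms use far richer models.

```python
import statistics
from typing import List, Tuple


def learn_baseline(history: List[float]) -> Tuple[float, float]:
    """Learn 'normal' behaviour as the mean and standard deviation of past readings."""
    return statistics.mean(history), statistics.stdev(history)


def is_anomalous(value: float, mean: float, stdev: float, z_limit: float = 3.0) -> bool:
    """Flag a reading more than z_limit standard deviations from the baseline."""
    if stdev == 0:
        return value != mean
    return abs(value - mean) / stdev > z_limit


def scaling_decision(value: float, mean: float, stdev: float) -> str:
    """Suggest an action for the latest reading."""
    if is_anomalous(value, mean, stdev):
        # Unusually low load may signal a failure rather than spare capacity.
        return "scale_out" if value > mean else "investigate"
    return "no_change"


history = [41.0, 39.5, 40.2, 42.1, 38.9, 40.7, 41.3, 39.8]  # CPU % samples
mean, stdev = learn_baseline(history)
```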

Now, as part of a growing trend, CIOs are taking their automation efforts to the next level with serverless computing. In this model, cloud vendors dynamically and automatically allocate the compute, storage, and memory based on the request for a higher-order service (such as a database or a function of code). In traditional cloud service models, organizations had to design and provision such allocations manually. The end goal: to create a NoOps IT environment that is automated and abstracted from underlying infrastructure to an extent that only very small teams are needed to manage it.
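In the serverless model, the developer supplies only the function and the provider allocates compute and memory per request. A minimal example is an AWS Lambda-style Python handler, which the platform invokes as `handler(event, context)`; the event fields below are hypothetical, and the response shape is the one used with API Gateway proxy integrations. Other providers (Google Cloud Functions, Azure Functions) follow the same idea with different signatures. Because it is a plain function, it can also be exercised locally.

```python
import json
from typing import Any, Dict


def handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    """Entry point the platform calls per request; we provision no servers ourselves."""
    name = event.get("name", "world")
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


# Local invocation: in production the platform supplies event and context.
response = handler({"name": "NoOps"}, None)
```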

NoOps is a fully automated, cloud-based approach that eliminates the operations team, aside from an integrated team focused on the life cycle. The self-managed system requires resiliency and a high level of maturity, with failure points that have already been addressed.

NoOps shouldn’t be considered similar to outsourcing IT operations. NoOps is not a platform to be bought. It’s not about moving to SaaS or the cloud and expecting those vendors to run operations. Nor is it about a single technology play. It’s not the same as serverless technology, containers, Kubernetes or microservices, but these all play a role in moving an IT shop further toward NoOps.

NoOps also enables quick learning about drawbacks and about customer and product needs. However, a self-maintained system still requires upkeep to keep pace with advances and innovation.

NoOps requires multiple technologies and more importantly a reworking of IT processes and workflows where automation, machine learning and even artificial intelligence eliminate not only repetitive tasks but higher-level tasks that workers now handle.

With the level of automation that NoOps provides, CIOs can then invest the surplus human capacity in developing new, value-add capabilities that enhance operational speed and efficiency.

The future market for NoOps:

Like the other aspects of NoOps, cloud migrations might reduce operations requirements. Full NoOps remains hard to reach, though, probably because other areas of IT aren’t built with the NoOps goal in mind: hardware failures still aren’t considered a developer’s responsibility, and engineers aren’t usually held responsible for programming errors.

This all raises the question: Is NoOps designed to get the rest of the IT department to do things in the interest of IT operations, rather than leaving it as a problem for operations to resolve? And if so, shouldn’t we have been doing that all along?

Several cloud providers currently offer serverless platforms that can help enterprises move ever closer to a NoOps state. Amazon, Google, and Microsoft dominate today’s serverless market, but Alibaba, IBM, Oracle, and a number of smaller vendors are bringing their own serverless platforms and enabling technologies to market as well.

If you want to chat about this subject or any other thought-provoking one, please get in touch with us at: info@dasfederal.com

Everything-as-a-Service (XaaS) Solutions Continue to Transform IT Enterprises

Posted on February 28, 2021

Organizations aim for more control over the enterprise IT they use and how they pay for it — and that demand has been met by a shift to service-based delivery models for providing products, capabilities, and tools.

What is XaaS? XaaS, or Everything-as-a-Service, is a newer offering under the “as-a-Service” designation, i.e., a service available on request over the internet using digital devices. Unlike SaaS (Software-as-a-Service), PaaS (Platform-as-a-Service) and IaaS (Infrastructure-as-a-Service), Everything-as-a-Service is not confined to digital products alone. You can get everything, from food to medical consultations, without leaving your home or office, by using certain online services. Hence the “Everything” in the name.

With the pandemic still a threat, the need for cloud and Everything-as-a-Service (XaaS) solutions has intensified. But as XaaS gains momentum, the competitive advantage it offers may erode, so adopters should leverage XaaS for advanced or emerging technologies to stay a step ahead. The use of XaaS to boost business agility, in addition to reducing costs and improving workforce efficiency, will continue to strengthen.

XaaS has also helped most of the traditional IT industries to create new business processes, products and services, and business models. It has even changed how we sell to our customers.

The future market for XaaS:

The combination of cloud computing and comprehensive, high-bandwidth, global internet access provides a productive environment for XaaS growth.

Some organizations have been hesitant to adopt XaaS because of security, compliance, and business governance concerns. However, service providers increasingly address these concerns, allowing organizations to bring additional workloads into the cloud.

Leading the way in technology, DAS Federal (Digital Applied Solutions) specializes in providing high-quality Information Technology services and consulting to US Federal Agencies. For more information, contact: info@dasfederal.com